Cloud Cluster with Elastic Scaling in Kubernetes - Overview

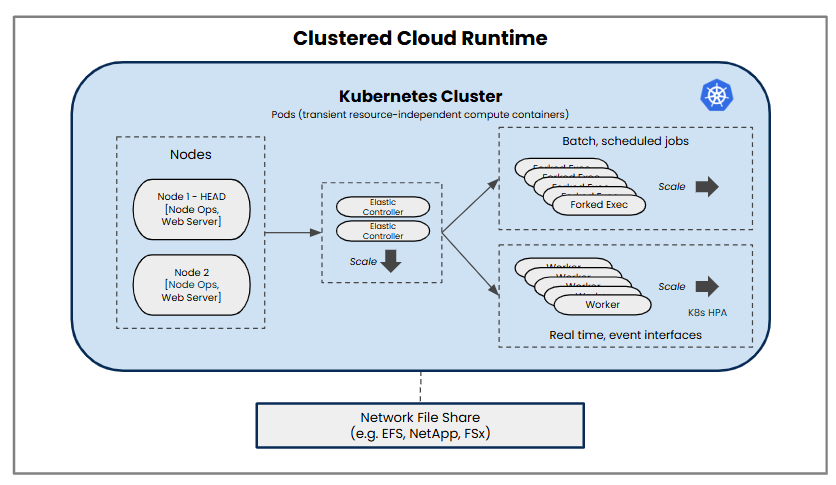

Elastic executions is a runtime cloud feature that uses native Kubernetes scaling capabilities to scale the Boomi runtime at a finer level of granularity. Some basic understanding of Kubernetes is therefore needed.

In node-based scaling clouds, integration processes, such as forked executions or execution workers, run as multiple JVMs within each cluster VM. In an elastic scaling cloud, those processes run as individual Kubernetes pods outside the Boomi runtime nodes, which enables independent and more granular scaling. As a result, the Boomi cluster nodes are fewer, smaller, and static, while the execution-related pods come and go.

Kubernetes uses a two-factor algorithm for scaling. It takes into account both Boomi application metrics (such as concurrent executions) and actual server resource utilization (CPU, memory). These two factors determine when to spin up additional execution workers, as well as when to spin up or spin down the infrastructure nodes or servers powering the Kubernetes cluster.
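To make the two-factor idea concrete, here is a minimal sketch of how a replica count could be derived from one application metric and one resource metric. It assumes the behavior of the standard Kubernetes Horizontal Pod Autoscaler, which computes a desired replica count per metric and takes the largest. Whether elastic executions uses exactly this mechanism is an assumption, and the function names, targets, and limits below are hypothetical, not Boomi settings.

```python
import math


def desired_replicas(current_replicas: int, current_value: float, target_value: float) -> int:
    """HPA-style ratio: ceil(current_replicas * current_value / target_value)."""
    return math.ceil(current_replicas * current_value / target_value)


def two_factor_replicas(
    current_replicas: int,
    executions_per_pod: float,         # application metric (hypothetical name)
    target_executions_per_pod: float,  # hypothetical target
    cpu_percent: float,                # resource metric, average utilization in percent
    target_cpu_percent: float,         # hypothetical target
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Return the replica count demanded by the more loaded of the two factors."""
    by_app = desired_replicas(current_replicas, executions_per_pod, target_executions_per_pod)
    by_cpu = desired_replicas(current_replicas, cpu_percent, target_cpu_percent)
    # Take the larger proposal so that neither factor is left under-provisioned,
    # then clamp the result to the configured bounds.
    wanted = max(by_app, by_cpu)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Executions are above target while CPU is below target: the application metric drives scaling.
    print(two_factor_replicas(4, 12, 8, 50, 70))
    # Executions are low but CPU is above target: the resource metric drives scaling.
    print(two_factor_replicas(4, 2, 8, 90, 60))
```

This sketch leaves out details that a real autoscaler applies, such as tolerance bands and stabilization windows, and it covers only the pod count. Adding or removing the underlying infrastructure nodes is a separate decision made at the cluster level.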

Elastic Execution in your current cloud environment

If you plan to enable elastic executions on an existing runtime cloud, we recommend first creating a new runtime cloud installation to familiarize yourself with the capabilities and configurations, especially if you are also new to Kubernetes deployments. Once you are familiar with them, you can plan to apply the same changes to your existing runtime cloud.

The following table details the differences between elastic scaling and node-based scaling clouds.

| Elastic scaling with Kubernetes | Node-based scaling |
|---|---|

|

|